This doc explains what exactly happens in a Peoplesoft

Upgrade. This is generic to all Apps Upgrade but for explanations I am taking

example of HR90-HR92

How many DBs we need for an initial upgrade?. Let’s say I am

doing a HR90 (Tools 848)-HR92 Upgrade (Tools853)

2. Old

Demo DB (HR90Demo DB)

3. New

release Demo (HR92 Demo DB)

What I am trying here is to explain the important steps

involved in a Peoplesoft Upgrade and in depth what these steps do. Since there is

couple of changes introduced in Peoplesoft 9.2 the article is based on 9.2

upgrades

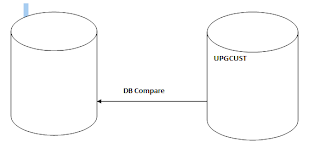

UPGCUST lifecycle

during Upgrade

UPGCUST is a very important project throughout the upgrade

process and is the main project which handles customization during the upgrade

process. Peoplesoft takes care of customization on app objects during the

upgrade process.

In first Chapter UPGCUST project is created in your target DB by comparing Copy of Production against the Copy of Current Demo database. So that means UPGCUST will have all the customized objects and will remain in target DB

Now In chapter Tools Upgrade target is converted to same

tools as that of source. In this case 8.53.So what we have now

- In target we have UPGCUST which contains all customization

- We have a new release Demo which have all new objects which we need

Now at step Running New release UPGCUST compare we compare

UPCUST project in COP with new release demo. This compare report needs to be

analyzed in depth and have to decide which customizations to keep and which one

to delete. This may need involvement

from Dev/Functional teams. Once the decisions

are made UPGCUST with the customizations we decided to preserver will get

copied to New release Demo. So Now the

new release demo have all customizations in COP+ All the new objects.

SQL Renames

Why we do some SQL renames in chapter1.

This step renames the physical table to a “temporary” name

at database level. This step is needed

to handle when a record name is changed in new release. Let me explain with an

example

Let’s say a record PS_RECORD1 in Peoplesoft 9.0 is renamed

to PS_RECORD2 in peoplesoft9.2. That means PS_RECORD2 should have PS_RECORD1

data after the upgrade process. Let’s see how this happens

- PS_RECORD1 exists in 9.0. In chapter1 we normally rename PS_RECORD1 to PS_RECORD1_TMP at database level.

- When new release definitions + customizations gets copied to COP RECORD2 will get copied and RECORD1 will get deleted from COP

- During create table step PS_RECORD2 will get built but will be empty

- Now data gets populated in PS_RECORD2 during DC from temporary table which got created in step1

Data mover Renames

You can see field renames using datamover also. This is

needed when a field is renamed in the new release.

For example lets say a field FIELD1 is renamed to FIELD2 in

9.2. So when the upgrade is complete ideally all records using FIELD 1 should

have FIELD2 and data from FIELD1.

Lets assume we don’t use DM rename

- In this case all records which use FIELD 1 will get replaced by new column FIELD2 when new release import happens

- Now when we alter the record what it will do is, it will drop column FIELD1 (as its not there in appdesigner after new release import) and add a new column FIELD2 (as FIELD2 gets copied during new release import), Since alter don’t know from anywhere FIELD2 is the new name of FIELD1. This it will result in value loss

--

-- Alters for record

PS_RECORD

-- FIELD2- add

-- FIELD1 - delete

-- Start the

Transaction

-- Create temporary

table

CREATE TABLE

PSY_RECORD (OTHERFIELD VARCHAR(10) NOT NULL

FIELD2 VARCHAR2(10)

NOT NULL,

) TABLESPACE XXX

STORAGE (INITIAL

40000 NEXT 100000 MAXEXTENTS UNLIMITED

PCTINCREASE 0) PCTFREE 10

PCTUSED 80

/

-- Copy from source to

temp table

INSERT INTO PSY_RECORD

(

OTHERFIELD,

FIELD2,

)

SELECT

OTHERFIELD,

' '------ If you see here FIELD2 gets populated with default value and not with the FIELD1 value which results in data loss.

' '------ If you see here FIELD2 gets populated with default value and not with the FIELD1 value which results in data loss.

FROM PS_RECORD

/

-- CAUTION: Drop

Original Table

DROP TABLE PS_RECORD

/

-- Rename Table

RENAME PSY_RECORD

PS_RECORD

/

Now ideally we need FIELD1 data to FIELD2 to avoid data loss

during field rename. This is where rename of datamover comes in place

- In Chapter we rename FIELD1 to FIELD2 using datamover. This makes an entry in PSOBJCHNG table to indicate FIELD1 is renamed to FIELD2.

- Now all the records will have FIELD2 instead of FIELD1 and after new release import when we alter any such record, it first checks PSOBJCHNG and see that there is an entry for FIELD2 and it identifies that it’s a rename of FIELD1. So in this case FIELD2 is populated with FIELD1 values during alter

SQL> select

count(*) from PSOBJCHNG;

COUNT(*)

----------

0

_________________________________________

rename field

recordname.fieldname as newfieldname;

Started: Fri Mar 22 08:00:13 2013

Data Mover Release:

8.53

Database: PF9U (ENG)

Rename FIELD1

-> FIELD2

Ended: Fri Mar 22

08:00:15 2013

Successful completion

Script Completed.

_________________________________________

SQL> select * from

PSOBJCHNG;

E OLDNAME NEWNAME

- ------------------

------------------

OBJCHG_STAMP

---------------------------------------------------------------------------

OLDNAME2

------------------

3 FIELD1 FIELD2

22-MAR-13

08.00.15.208645 AM

RECORD1

_______________________________________________

Copy the new

definition (FIELD2) to target by app designer during upgrade

________________________________________________

Alter

________________________________________________

-- Do not perform

deletes at this time

--

-- Alters for record

PS_GC_ACCT_BAL_TBL

-- FIELD2 - add to rename FIELD1

(76,51)

-- FIELD1 - delete to rename FLAG1

(76,51)

-- Start the

Transaction

-- Create temporary

table

CREATE TABLE

PSY_RECORD (OTHERFIELD VARCHAR2(5) NOT NULL,

FIELD2 VARCHAR2(10) NOT NULL) TABLESPACE XXX

STORAGE (INITIAL

40000 NEXT 100000 MAXEXTENTS UNLIMITED

PCTINCREASE 0) PCTFREE 10

PCTUSED 80

/

-- Copy from source to

temp table

INSERT INTO

PSYGC_ACCT_BAL_TBL (

OTHERFIELD,

FIELD2

)

SELECT

OTHERFIELD,

FIELD1

FROM PS_GC_ACCT_BAL_TBL

/

-- CAUTION: Drop

Original Table

DROP TABLE PS_RECORD

/

-- Rename Table

RENAME PSY_RECORD TO

PS_RECORD

/

-- Done

ALLTABS Build

ALLTABS is a build process which handles the following

Alter existing

tables to:

ADD new columns

CHANGE columns

RENAME

columns/tables

So this is a build process where we don’t do any deletes so let’s

see why we are not doing deletes

Let’s say a RECORD RECORD1 have the following structure in

COP

RECORD1

FIELD1 VARCHAR(10)

FIELD2 NUMBER

FIELD3 VARCHAR(5)

The same record when it came to new release has the

following structure in source

RECORD1

FIELD1 VARCHAR(10)

FIELD2 NUMBER

NEWFIELD VARCHAR(10)

So in target DB we will have a table PS_RECORD1 with the

same structure as shown above with three fields. Now RECORD1 will get

overwritten when new release import happens and will have two fields. That

means RECORD1 in Appdesigner will have two fields after new release copy and

PS_RECORD1 will have three fields in COP DB. Now during all tabs alter we don’t

select deletes. So PS_RECORD gets built and won’t delete FIELD3 at this point

of time. So PS_RECORD after ALLTABS will have a hybrid structure as shown below

RECORD1

FIELD1 VARCHAR(7)

FIELD2 NUMBER

FIELD3 VARCHAR(5)

NEWFIELD VARCHAR(10)

Why we need FIELD3 is because in DC this field will be used

and data will get pumbed to other field or other tables as per new design. Once

the data from this field is handled properly build process after DC will delete

this extra field.

As I already mentioned if there were any field renames in

chapter1 those will be handled by ALLTABS build as explained above.

ALLTABS also handles datatype changes which can be handled

buy build process. Such as say a field is changed from varchar to char or

length of a field is changed in new release . All the field datatype or

datalength changes which can be handled by alter by table rename can be handled

by ALLTABS alter.

But there will be some complex datatype changes or data

length changes which cannot be handled by ALLTABS alter. Say for example a LOB

field is changed to number in new release. We cannot simply do a insert from

select as we normally do in ALLTABS alter. These are complex transformation and

DC handles these.

Data Conversion

Process

As the name indicates data conversion is the process of

converting data in COP to the new structure.

In order to convert data we typically need old release data (and

columns/tables which we preserver by renaming) and the new release

tables/columns which will come as part of new release import. In this way DC

app engines can read from old and write to the new.

ALLTABS build take care of normal conversions which can be

carried out directly through build SQLs, like just increasing the coumns size

and possible data type changes. What DC handles will be difficult conversions

and conversions which needs some logic when moving to new release.

What Happens after DC

After DC there may new set old setup data that we need to

export from new demo and import in target.

Alters after DC

So after DC the next alter handles deletes also. In alltabs

alter the extra columns where not deleted as they were needed in DC and in this

alter the columns which are not needed are deleted as DC is over. This will

complete the structural changes.